This blog was co-authored by Marco Kuendig, CTO at copebit AG, Switzerland and Simone Pomata, Senior Solutions Architect at AWS Switzerland.

Who is copebit?

copebit is an AWS advanced consulting and software development company based in Zurich, Switzerland. We have been working with AWS for many years implementing AWS-based cloud solutions for clients every day.

copebit was founded in 2016 as a born-in-the-cloud company. It initially built its consulting offering in the public cloud space and was quickly certified as an AWS Consulting Partner. Since 2018, copebit has grown substantially and added many clients to its customer base. In 2020, copebit became a certified AWS Advanced Consulting Partner.

Since then, copebit has helped a number of companies build microservices-based applications running on container platforms, and several of our employees are certified in container solutions. Through our support offerings, we also operate AWS environments for ourselves and our clients using DevOps principles and methods.

Early phase, experience, and challenges

We started building our service offering in 2018 using the AWS Service Catalog. We have built many AWS environments for our clients based on AWS CloudFormation and adapted these CloudFormation templates to be customizable and modular, and published them to our copebit Service Catalog for downstream consumption. We built many products in the copebit Service Catalog. The first step was always to set up the product foundation, including networking, monitoring, security, and other best practices. Then we built the core layers of the product, including compute, data, and application logic.

More and more clients started to request solutions to host containerized applications. For that, we often use Amazon Elastic Container Service (Amazon ECS), combined with CI/CD pipelines based on AWS CodePipeline (mainly with the blue/green deployment approach).

Relatively early in the journey with containers, we realized that we needed additional capabilities in how we managed our CloudFormation templates. Our application developers needed a self-service way to build and deploy new microservices with ECS quickly, without involving the cloud platform team.

The challenges with the relatively static approach of CloudFormation templates were mainly about complexity and adaptability. Many microservices share similar characteristics but still have various moving parts that are difficult to encode in a static template. In an attempt to improve the flexibility of our CloudFormation templates, we started to build complex bash scripts. But we quickly found out that these bash scripts made the handling overly complex. Also, CloudFormation is declarative, and using bash with its imperative operating mode was not a natural fit. This approach often broke the declarative model.

The AWS services of our stack—Amazon ECS, AWS CodePipeline, AWS CodeBuild, AWS CodeDeploy, Amazon CloudWatch Synthetics canaries, sometimes even AWS App Mesh—started to become a challenge to integrate with each other and to fine-tune for an optimal setup.

We therefore started to explore other possibilities and found AWS Proton. AWS Proton is a fully managed service for deploying container and serverless applications that enables platform administrators to achieve greater governance and developers to achieve greater productivity.

AWS Proton

Overview

At copebit, we leverage AWS Proton as a developer self-service portal for microservices.

With copebit growing fourfold over the last three years, we had to give the organization more structure. We have established an agile, scalable organization that is inspired by the Scaled Agile Framework (SAFe).

copebit is now structured into scrum teams, each doing their own projects and software development. Each team is focused on specific clients and also owns a particular software stack. One of these teams in particular is using AWS Proton to streamline their development work.

There are two distinct roles in this team: platform admins and application developers. The platform admins are AWS specialists who work closely with the AWS platform and services. They stay up to date with what is happening in AWS and also create the AWS Proton templates. The application developers, on the other hand, don’t have to learn all the AWS technologies in detail. With the prepared AWS Proton templates, they can still use AWS in a very efficient and agile way.

The application developers are experts in software development and can easily use these sophisticated templates to provision and run their newly created applications. Thanks to this approach, the productivity of our developers increased by an average of 15 percent.

Harnessing the Jinja engine

A big advantage of AWS Proton lies in the possibility of using the Jinja engine. Jinja is a templating language that makes it easy to replace values in CloudFormation templates. Before, we could only parameterize the templates with the parameters option of CloudFormation. With Jinja, we can define far more complex patterns, advanced customizations, and replacement strategies, such as conditionals and loops that are evaluated before CloudFormation processes the template. The result is close to a programmable CloudFormation template that can be adapted to many different use cases without becoming overly complex. You can think of it as an additional abstraction and customization layer on top of CloudFormation.

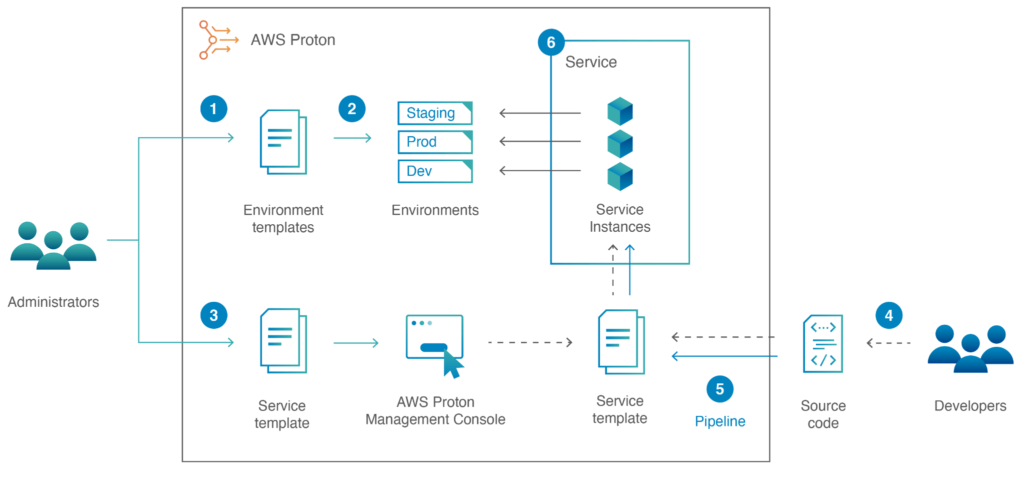

In addition to the Jinja rendering capabilities, AWS Proton offers other advantages. For example, AWS Proton implements a very prescriptive relationship between administrator and developer roles at its core. Administrators not only define the templates but also create and manage environments, while developers own the services. Environments and services are tied together, and services have visibility into the environment characteristics. These characteristics can be used to customize the service deployments (Jinja just adds a lot more customization flexibility to this core foundational model).
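To make the idea of a rendering pass concrete, here is a minimal sketch. It deliberately uses plain Python instead of Jinja so that it runs without extra dependencies, and it is not an AWS Proton template. The function `render_service`, the input names (`name`, `containers`, `enable_canary`, `desired_count`), and the `ClusterName` environment output are all hypothetical, and the resource properties are abbreviated, so the result would not deploy as-is. What it shows is the pattern: values published by the environment and values chosen by the developer are combined, with a loop and a conditional, before CloudFormation ever sees the template.

```python
import json


def render_service(environment_outputs, service_inputs):
    """Combine environment outputs and developer inputs into a template dict."""
    name = service_inputs["name"]
    resources = {
        "Service": {
            "Type": "AWS::ECS::Service",
            "Properties": {
                # Published by the environment, not typed in by the developer.
                "Cluster": environment_outputs["ClusterName"],
                "DesiredCount": service_inputs.get("desired_count", 1),
                "LaunchType": "FARGATE",
            },
        }
    }
    # Loop: one log group per container the developer lists.
    for container in service_inputs.get("containers", []):
        resources[f"{container.capitalize()}Logs"] = {
            "Type": "AWS::Logs::LogGroup",
            "Properties": {"LogGroupName": f"/ecs/{name}/{container}"},
        }
    # Conditional: the canary resource exists only when it is requested.
    if service_inputs.get("enable_canary", False):
        resources["Canary"] = {
            "Type": "AWS::Synthetics::Canary",
            "Properties": {"Name": f"{name}-canary"},  # abbreviated
        }
    return {"AWSTemplateFormatVersion": "2010-09-09", "Resources": resources}


template = render_service(
    {"ClusterName": "dev-cluster"},
    {"name": "orders", "containers": ["web", "worker"], "enable_canary": True},
)
print(json.dumps(template, indent=2))
```

Static CloudFormation parameters can substitute values, but they cannot add or remove a variable number of resources in this way, which is the flexibility a templating layer contributes.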

Probably more importantly, AWS Proton is application lifecycle aware. An AWS Proton service is more than generic templated infrastructure; it includes application-aware infrastructure that allows application code to be deployed (for example, Amazon ECS, AWS Lambda, or Amazon EC2). This application awareness allows customers to leverage more opinionated workflows that remove part of the undifferentiated heavy lifting associated with building developer portals for software delivery.

In Figure 1, you see a high-level overview of AWS Proton and its main components.

Figure 1. Simple AWS Proton workflow.

To build applications, we need to lay a good, secure, and highly automated foundation. For that, AWS Proton uses the environment templates approach.

Environment templates

Shared infrastructure components are codified in environment templates. In environment templates, we define the networking, the container hosting platforms like ECS clusters, and other services that are used by multiple microservices (such as databases).

These templates can then be used to instantiate multiple environments like development, testing, and production. As these templates are now highly customizable, these environments will look similar but still have the flexibility to adapt to their specific needs.

As we already have many infrastructure templates built in our Service Catalog, we were able to quite easily adapt these to work with AWS Proton. Visit our AWS Proton documentation to learn more about how to author templates.

In addition, the copebit services (defined as products in AWS Service Catalog) and AWS Proton can work hand in hand. For those features that are only required once in the AWS account, we use AWS Service Catalog products to bootstrap the account. We call that the account preparation phase. In this phase, we deploy multiple security and compliance services, which we establish in each account that we operate, regardless of workload type. Some examples are AWS Budgets, AWS Config, Amazon GuardDuty, and some IAM controls, as well as security-focused AWS Lambda functions.

On top of that, our platform and infrastructure admins prepare environment templates that describe the network, clusters, and other infrastructure resources. Then, the team responsible for the base infrastructure can use environment templates to create the necessary infrastructure services like ECS clusters.

A great infrastructure that is highly automated and secure and provides integrated networking is fantastic but doesn’t yet make an application. For that, we need the actual microservices built and integrated. And here the service templates of AWS Proton come into action.

Service templates

We have used containerized microservices for years. Our developers are building and testing their microservices on their local workstations with Docker and similar container solutions. To deploy these container images to run as microservices, developers can now use AWS Proton service templates in a self-service manner. A developer that needs to launch a new part of the application will write the software, containerize it, and “order” a new service based on the service templates of AWS Proton. The service templates are built and maintained by the platform admins. As a result, developers don’t have to spend time managing the infrastructure and can focus on the application.

The service that is launched will then have everything necessary to start the new microservice. It will have a CI/CD pipeline and will, on the next commit, automatically build and deploy the image to ECS clusters that use AWS Fargate, a serverless compute engine for containers.

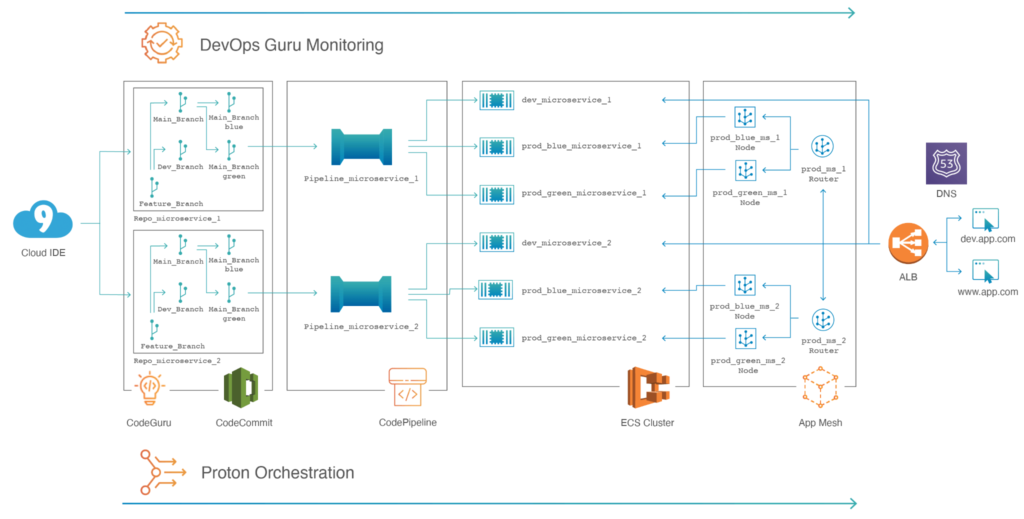

Figure 2. Sample application managed with AWS Proton.

A new microservice does not only need to run, but it also needs to be properly deployed and monitored. All this is also codified in our service templates. At launch, a service template will establish:

- CI/CD pipeline with
  - CodeBuild
  - CodePipeline
  - CodeDeploy
- Application Load Balancer
- ECS services
- ECS task
- Application Load Balancer integration with ECS
- Monitoring with metrics, alarms, and canaries

Our CI/CD pipelines, which we have built over the years, have become quite complex. They build the images, customize the images if necessary, and have a blue/green style deployment approach included. Utilizing blue/green is important for us, as we have the best flexibility and control as well as safety included in the deployment. Before the traffic hits the new version, we can test it, and if something goes wrong after the deployment, we can fall back on the former software version without deploying the old software again. It still runs in the former blue environment, and a fallback can be done in seconds.
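The fallback behavior described above can be modeled in a few lines. This is a purely in-memory sketch, not the CodeDeploy API and not our pipeline code: the class `BlueGreenService`, its methods, and the `smoke_test` callback are hypothetical names chosen for illustration. It shows the two properties that matter: traffic only switches after the new version passes its test, and the previous version stays available so that a rollback is just a switch back.

```python
class BlueGreenService:
    """Toy model of a blue/green deployment with an instant fallback."""

    def __init__(self, live_version):
        self.live = {"color": "blue", "version": live_version}
        self.idle = None  # previous version, kept running for fallback

    def deploy(self, version, smoke_test):
        """Start the new version on the idle color; switch only if it passes."""
        new_color = "green" if self.live["color"] == "blue" else "blue"
        candidate = {"color": new_color, "version": version}
        if not smoke_test(candidate):
            return False  # live traffic was never touched
        self.live, self.idle = candidate, self.live
        return True

    def rollback(self):
        """Send traffic back to the previous version without redeploying it."""
        if self.idle is None:
            raise RuntimeError("no previous version to fall back to")
        self.live, self.idle = self.idle, self.live


service = BlueGreenService("1.0")
service.deploy("1.1", smoke_test=lambda candidate: True)
print(service.live)   # the new version now receives traffic
service.rollback()
print(service.live)   # the former version is live again
```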

As the platform admins also have that codified in the service template, every microservice automatically gets the benefits of that blue/green deployment approach. The good thing is that developers do not need to be experts in this domain because everything is codified for them, and they only consume the templates that embed these best practices.

For our own applications as well as for infrastructures that we build as an AWS partner for our clients, we often have a minimum of three environments (development, testing, and production) and sometimes even more. Having all these environments built in a highly standardized fashion based on well-tested and highly automated templates helps reduce the efforts necessary to build and maintain these environments.

Imagine an application with six microservices deployed over three environments, with each microservice having five different components (CodePipeline, ECS, Application Load Balancer rules, CloudWatch, canaries, etc.). Multiplied, that leads to 90 components (6 × 3 × 5) spread across many different services and environments. Having it based on the same standardized templates makes the setup and maintenance much more streamlined. We do all this across multiple AWS accounts, and we leverage AWS Proton multi-account awareness to solve this challenge.
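The count above is simply the size of the Cartesian product of the three dimensions. As a small illustration (the microservice names are placeholders, and the component list uses the five examples named in the text):

```python
from itertools import product

microservices = [f"service-{n}" for n in range(1, 7)]  # placeholder names
environments = ["development", "testing", "production"]
components = [
    "CodePipeline",
    "ECS",
    "Application Load Balancer rules",
    "CloudWatch",
    "canaries",
]

# Every (microservice, environment, component) combination is one thing
# that has to be created, configured, and kept up to date.
inventory = list(product(microservices, environments, components))
print(len(inventory))  # 90

per_environment = len(inventory) // len(environments)
print(per_environment)  # 30
```

Adding one more environment adds another 30 components, which is why generating all of them from the same templates pays off quickly.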

Ongoing maintenance

AWS Proton also helps us dramatically as the environment and service templates are versioned. Each deployed environment or service is built on a specific template version. If these templates need to be updated, a new version can be thoroughly tested and then published by the cloud platform teams that are experts in that domain. The application developers can then again do these updates in self-service mode while progressing through the various test stages. This brings us a much smoother release process regarding infrastructure-related updates for both environments and service templates.
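The bookkeeping behind such a staged update can be sketched as follows. This is a minimal model under stated assumptions, not the AWS Proton API: `ServiceInstance`, `outdated`, the instance names, and the `(major, minor)` tuple representation are hypothetical. It lists the instances that still run an older template version, ordered so that earlier stages are updated first.

```python
from dataclasses import dataclass

STAGES = ("development", "testing", "production")


@dataclass(frozen=True)
class ServiceInstance:
    name: str
    stage: str
    template_version: tuple  # (major, minor)


def outdated(instances, recommended):
    """Return instances behind the recommended version, earliest stage first."""
    behind = [i for i in instances if i.template_version < recommended]
    return sorted(behind, key=lambda i: STAGES.index(i.stage))


fleet = [
    ServiceInstance("orders-prod", "production", (1, 0)),
    ServiceInstance("orders-dev", "development", (1, 2)),
    ServiceInstance("orders-test", "testing", (1, 1)),
]

for instance in outdated(fleet, recommended=(1, 2)):
    print(instance.name, instance.template_version)
```

Comparing versions as tuples keeps the ordering correct when a minor version reaches two digits, which a plain string comparison would get wrong.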

Summary and next steps

AWS Proton empowers our developers. They can use well-tested templates that have been provided by the cloud platform team. The developers don’t need to know all the details of the AWS services and can still use these services in a safe and efficient manner. This way, they are more productive and agile in application development. As already mentioned, their productivity has increased by an average of 15 percent.

As we had a lot of experience building infrastructure solutions based on the AWS Service Catalog, it was relatively easy for us to start building with AWS Proton. The ideas and techniques are similar, but AWS Proton provides a specific software deployment life cycle awareness that enables us to provide the control layers that our container deployments need. Also, our investment in AWS Service Catalog can be further leveraged and combined with the power of AWS Proton.

If you are interested in how copebit can help your business, please visit our website and get in touch. If you are interested in giving AWS Proton a spin, please start here.